Causal World Models: Bridging AI and Human Expertise

AI systems are rapidly evolving beyond simple question answering to tackle complex reasoning tasks. These advances are fueled by training on math and coding problems, where outcomes are easily verified and models can learn to reason [1]. But the real world is messy! Applied to broader reasoning tasks, these approaches tend to flounder. For instance, when OpenAI’s Deep research with o1-pro is asked “What is the predicted impact of reduced immigration on US inflation?”, we get a wall of text that describes the factors at a high level without the nuanced reasoning that a human expert would bring to the table.

Human experts have the unique ability to filter out noise and focus on the most critical 0.1% of information. This ability derives in part from experts' capacity to build and act upon sophisticated mental models of the world, developed over years of practice and experimentation. In finance, this type of rapid and accurate decision-making is paramount. Experts depend on their mental models to respond to new economic shifts and deal with massive information overload, often before these changes are priced into the market.

While there is no verifiable “right” answer for messy real-world reasoning tasks, thinking critically about the process of arriving at an answer can be a powerful tool for both humans and AI [2]. Can we leverage this intuition to build reasoning systems that meaningfully reason about the real world, without access to an objective verifier?

At Samaya, we are pioneering AI systems that build causal world models and emphasize the process of arriving at the right answer. There are 3 key ideas:

We built a research prototype that makes data-driven, quantitative predictions of the economic outcomes of an anticipated economic change. When asked to “predict the impact of reduced immigration on US inflation”, the system iteratively creates a causal world model to reason about this major economic change.

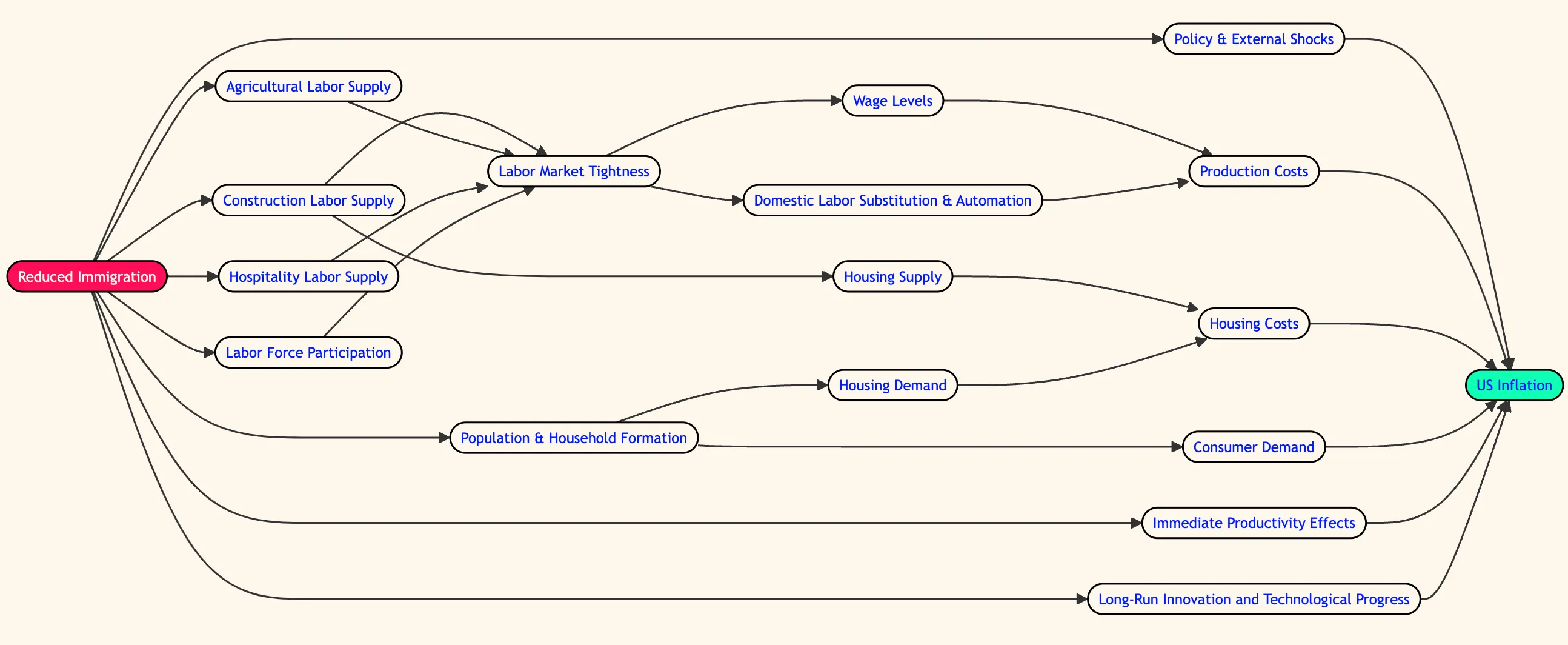

Figure 1: Causal world model that establishes various ways in which reduced immigration will affect US inflation

The AI traces through recent and historical data on labor supply constraints, housing costs, consumer demand, production costs, short-term productivity effects, and other factors, and creates mathematical equations that account for all causal pathways. In total, for this query, the system identified 1,100 relevant snippets of information from millions of documents. To present a concise model, it distilled this corpus to 189 highly relevant snippets. Finally, it built a data-driven mathematical model accounting for ~100 variables and their relationships. The result is an interactive, auditable, and modifiable artifact that a financial expert can collaborate on.
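To make the idea of "accounting for all causal pathways" concrete, here is a minimal sketch that enumerates every path from a cause to an outcome in a small signed graph. It is not the prototype's implementation: the graph, the node names, the edge signs, and the helper `causal_pathways` are all hypothetical and chosen only for illustration.

```python
from typing import Dict, List, Tuple

# Each edge carries a sign: +1 if the child moves with the parent,
# -1 if it moves against it. All edges and signs are illustrative
# assumptions, not output of the research prototype.
CausalGraph = Dict[str, List[Tuple[str, int]]]

TOY_GRAPH: CausalGraph = {
    "reduced_immigration": [
        ("labor_supply", -1),
        ("consumer_demand", -1),
        ("housing_demand", -1),
    ],
    "labor_supply": [("production_costs", -1)],
    "production_costs": [("inflation", +1)],
    "consumer_demand": [("inflation", +1)],
    "housing_demand": [("housing_costs", +1)],
    "housing_costs": [("inflation", +1)],
}


def causal_pathways(
    graph: CausalGraph, source: str, target: str
) -> List[Tuple[List[str], int]]:
    """Return every simple path from source to target with its net sign."""
    results: List[Tuple[List[str], int]] = []

    def dfs(node: str, path: List[str], sign: int) -> None:
        if node == target:
            results.append((path, sign))
            return
        for child, edge_sign in graph.get(node, []):
            if child not in path:  # skip cycles
                dfs(child, path + [child], sign * edge_sign)

    dfs(source, [source], 1)
    return results


if __name__ == "__main__":
    for path, sign in causal_pathways(TOY_GRAPH, "reduced_immigration", "inflation"):
        direction = "raises" if sign > 0 else "lowers"
        print(" -> ".join(path), f"({direction} inflation)")
```

Even in this toy graph the pathways disagree in sign: the labor supply channel pushes inflation up while the demand and housing channels push it down, which is why a qualitative list of factors is not enough to determine the net effect.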

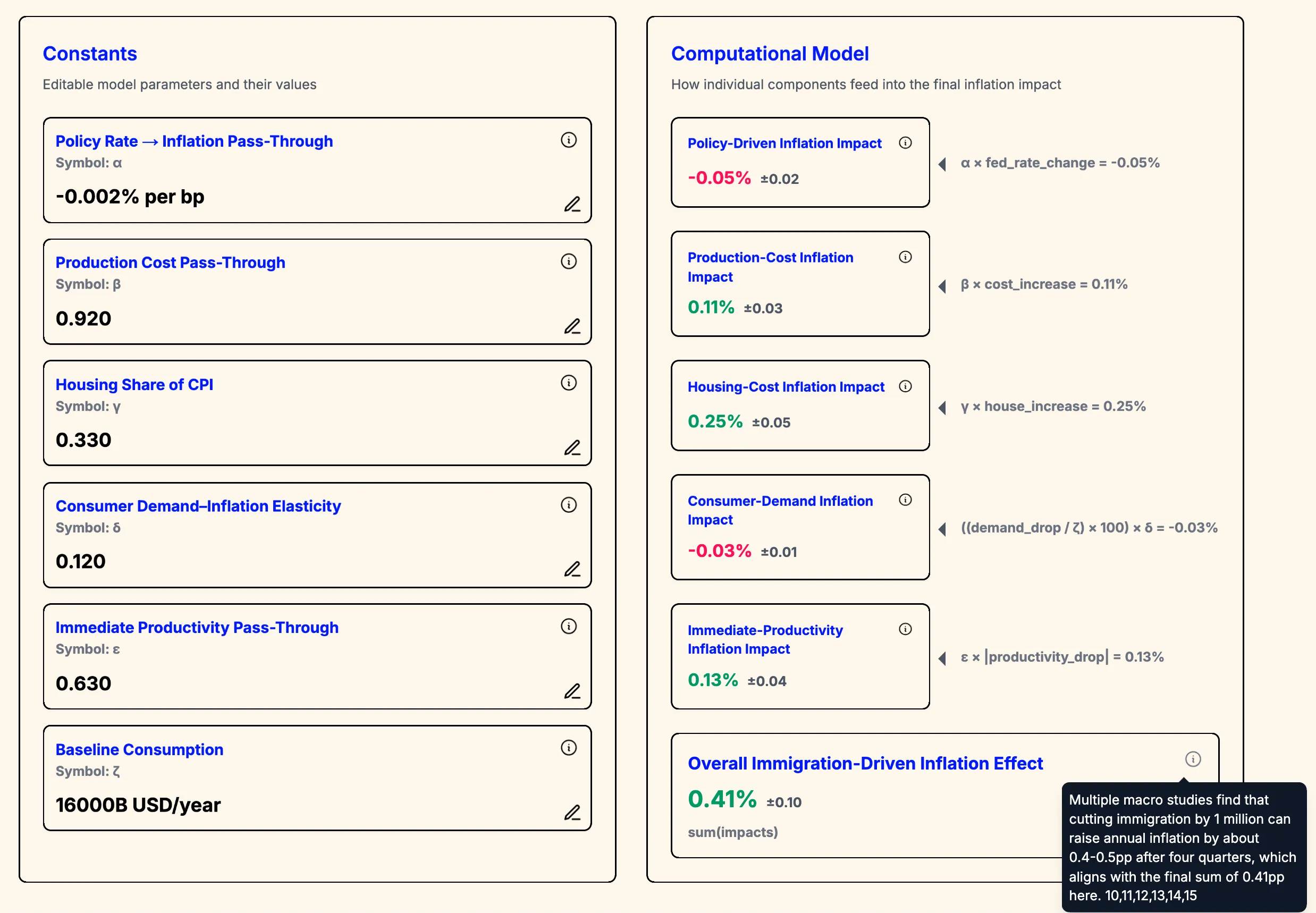

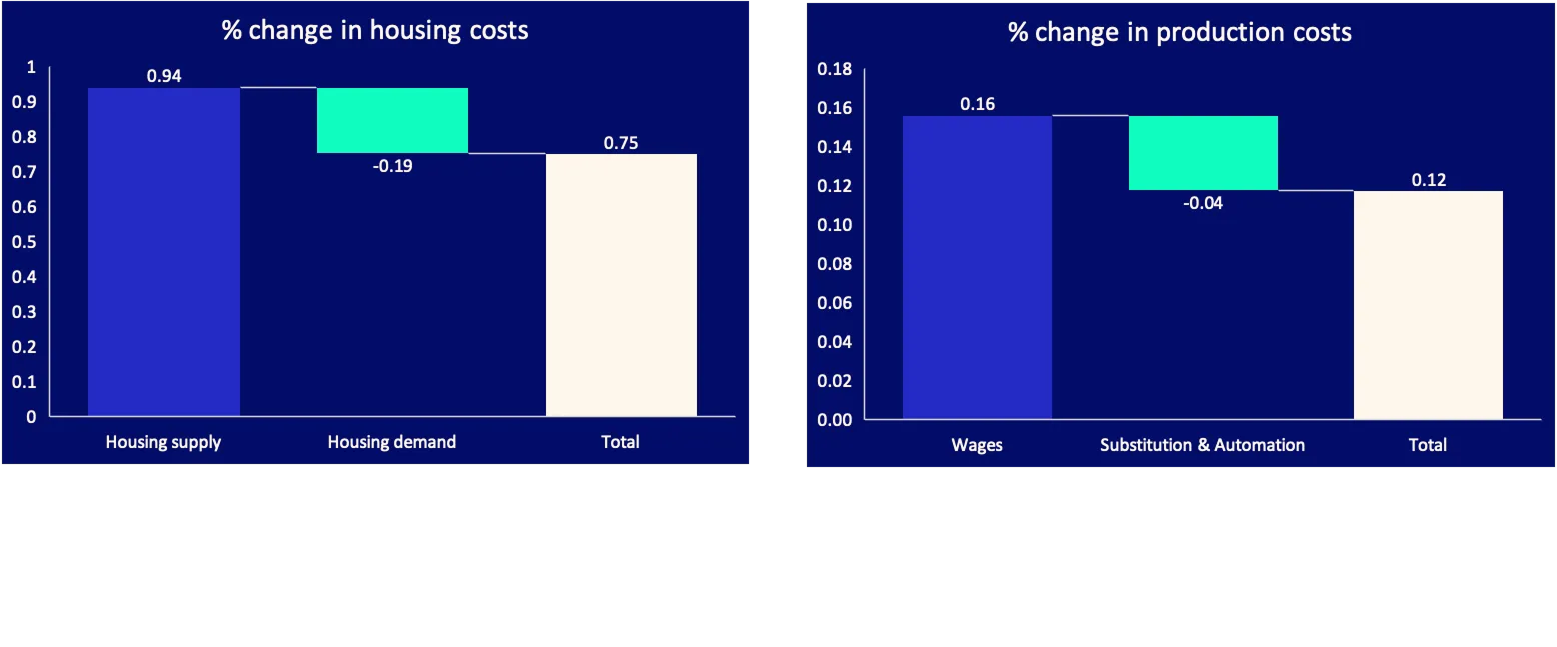

Our system produces the following model for estimating effect on inflation. We compute all the input variables and the pass-through rates that modulate the effect on inflation. This is a great starting point for analysts to overlay their expert opinion and change assumptions.

Figure 2: The set of variables directly affecting US inflation, computed by traversing all causal pathways

Figure 3: An interactive quantitative model which estimates the effect of reduced immigration on US inflation based on the causal world model
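The structure described here, input variables scaled by pass-through rates and combined into one estimate, can be sketched in a few lines. This is a simplified linear illustration, not the prototype's actual equations: the `Channel` class, the channel names, and every number below are placeholder assumptions with no empirical basis.

```python
from dataclasses import dataclass, replace
from typing import List


@dataclass(frozen=True)
class Channel:
    name: str
    input_change: float  # assumed change in the input variable, in percentage points
    pass_through: float  # assumed fraction of that change that reaches inflation


def estimate_inflation_effect(channels: List[Channel]) -> float:
    """Sum each channel's contribution: input change times pass-through rate."""
    return sum(c.input_change * c.pass_through for c in channels)


# Placeholder values only; signs encode opposing upward and downward pressures.
baseline = [
    Channel("wage_pressure", input_change=1.0, pass_through=0.5),
    Channel("consumer_demand", input_change=-0.5, pass_through=0.5),
    Channel("housing_costs", input_change=-0.5, pass_through=0.25),
]

# An analyst overrides one assumption and recomputes the combined effect.
revised = [
    replace(c, pass_through=0.25) if c.name == "wage_pressure" else c
    for c in baseline
]

if __name__ == "__main__":
    print(estimate_inflation_effect(baseline))  # 0.125
    print(estimate_inflation_effect(revised))   # -0.125
```

Under these placeholder numbers, changing a single pass-through rate flips the sign of the combined estimate. That sensitivity is the reason an auditable, modifiable model is useful: an expert can see which assumption drives the result and adjust it.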

Notably, the causal world model brings together opposing factors and estimates their combined effect, something that Deep research with o1-pro fails to account for.

Performing such a comprehensive process manually is practically impossible, which makes it remarkable that the research prototype demonstrates a nuanced understanding of a complex, messy world. We envision a future where Samaya’s AI systems create a new computational paradigm and empower experts to achieve unprecedented human-AI collaboration, unlocking answers to questions currently beyond human reasoning.

Ashwin and Thejas conceived and implemented the prototype. Sabrina Hao provided valuable domain expertise. We thank Maithra Raghu, Jack Hessel, Yuhao Zhang, Christos Baziotis, Michele Bevilacqua, Roberto Dessi, Fabio Petroni, and Alexander Baranov for their valuable discussions and feedback.

[1] DeepSeek-R1-Zero is trained with accuracy rewards for math and LeetCode problems.

[2] “A revealing anecdote shared at one panel highlighted the cultural divide: when AI systems reproduced known mathematical results, mathematicians were excited (seeing this as validation of the system’s capabilities), while AI researchers were disappointed (having hoped for novel discoveries).”